When we disco, rendered 3D-to-2D (三渲二)

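This post walks through the main steps and problems I ran into while giving the When we disco dance video a 3D-to-2D (anime-style) look. The two approaches that worked are AnimeGANv3 and DCT-Net. The post is long and consists mostly of failed attempts; if you are short on time, read only those two sections.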

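Motivation

On Bilibili I saw someone cut together the When we disco dance performed by 衿儿 and 粒粒 with the version performed by 嘉然 and 向晚, and label the result "三渲二" (3D rendered as 2D). I also noticed that nobody on Bilibili had yet used a GAN to turn the 衿儿 and 粒粒 dance video into animation; the closest thing I found was on Tieba, where an artist had drawn their dance by hand, and drawn it rather well. That is where the idea came from: animate the video with a GAN and post it to Bilibili.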

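Using Deep Dream Generator (failed)

A Baidu search told me that deepdreamgenerator is a well-known style-transfer website that makes it easy to process a single photo, so I registered a deepdream account. Once inside, I quickly ran into two problems:

- The servers are hosted abroad, so access from mainland China is slow.

- The models on the site accept only a single photo, not a whole video.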

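Using AnimeGANv2 (failed)

The first thing that came to mind for this problem was PaddlePaddle, because I had done a similar project on it before and the whole experience had been very good. Sure enough, I found a project called "PaddleHub一键视频动漫化" (one-click video animation with PaddleHub).

Paddle code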

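0. BUG

When generating a new version, empty folders cannot be added, and even when adding them succeeds, they do not show up after someone else forks the project. Please fix this, thanks :)

    !mkdir -p work/mp4_img work/mp4_img3 work/output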

1. Install PaddleHub

    !pip install paddlehub -U -i https://pypi.tuna.tsinghua.edu.cn/simple  # uses the Tsinghua mirror

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: paddlehub in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2.2.0)

…

(The rest of the output is omitted to save space.)

2. Set up the GPU environment

    %env CUDA_VISIBLE_DEVICES=0

env: CUDA_VISIBLE_DEVICES=0
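The %env magic only exists inside a notebook. When the same steps are run as a plain script, a minimal equivalent is to set the variable through os.environ, assuming this happens before the deep-learning framework is imported (the variable is read when the GPU runtime initializes):

```python
import os

# Select GPU 0; this must run before the deep-learning framework is imported.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

print("CUDA_VISIBLE_DEVICES =", os.environ["CUDA_VISIBLE_DEVICES"])
```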

3. Import the required libraries

    import cv2

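4. Choose the video and the style template

Tip: the style can be changed here.

Many other styles can be swapped in at this point; to learn more about them, click here.

    # input_video = 'test.mp4'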

E0812 16:53:29.861804 1550 analysis_config.cc:80] Please compile with gpu to EnableGpu()

W0812 16:53:29.861893 1550 analysis_predictor.cc:1145] Deprecated. Please use CreatePredictor instead.

5. Convert the video into images

Tip: run ls work/mp4_img | wc -w in a terminal to see how many images have been written so far.

    def transform_video_to_image(video_file_path, img_path):

    # Save every frame of the video as an image
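For readers who want to reproduce this step, here is a minimal sketch of frame extraction with OpenCV. It is not the notebook's original function body: the names extract_frames and frame_filename are my own, and the 0.jpg, 1.jpg, ... naming is an assumption based on the work/mp4_img/0.jpg path that appears in the output of step 6.

```python
import os


def frame_filename(index):
    """Assumed naming scheme: 0.jpg, 1.jpg, 2.jpg, ..."""
    return f"{index}.jpg"


def extract_frames(video_file_path, img_path):
    """Save every frame of the video as a JPEG; return (frame_count, fps)."""
    import cv2  # imported lazily so frame_filename() is usable without OpenCV

    os.makedirs(img_path, exist_ok=True)
    capture = cv2.VideoCapture(video_file_path)
    if not capture.isOpened():
        raise IOError(f"cannot open video: {video_file_path}")
    fps = capture.get(cv2.CAP_PROP_FPS)  # needed later to rebuild the video
    count = 0
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # end of stream (or a decode error)
                break
            cv2.imwrite(os.path.join(img_path, frame_filename(count)), frame)
            count += 1
    finally:
        capture.release()
    return count, fps
```

Returning the frame rate together with the frame count is a deliberate choice: the same value is needed again when the stylized frames are reassembled into a video.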

6. Apply the style to the images

Note: this step can take a long time to run.

Tip: run ls work/mp4_img3 | wc -w in a terminal to see how many images have been converted so far.

    def get_combine_img(input_file_patha):

    # def transform():

    def transform():

    transform()

work/mp4_img/0.jpg
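One detail that is easy to get wrong in a loop over frames is the order of the files. If the frames are named 0.jpg, 1.jpg, ... (an assumption based on the path printed above), a plain alphabetical sort puts 10.jpg before 2.jpg. The helper below, whose names are hypothetical, is a small sketch of sorting by the numeric index instead:

```python
import os
import re


def numeric_frame_order(filenames):
    """Keep only names like '12.jpg' and sort them by their integer index."""
    pattern = re.compile(r"^(\d+)\.jpg$")
    indexed = []
    for name in filenames:
        match = pattern.match(name)
        if match:
            indexed.append((int(match.group(1)), name))
    return [name for _, name in sorted(indexed)]


def list_frames(img_dir):
    """List the frame files of a directory in playback order."""
    return numeric_frame_order(os.listdir(img_dir))


print(numeric_frame_order(["10.jpg", "2.jpg", "0.jpg", "notes.txt", "1.jpg"]))
# → ['0.jpg', '1.jpg', '2.jpg', '10.jpg']
```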

7. Combine the images into a video

    def combine_image_to_video(comb_path, output_file_path, fps, is_print=False):

    import time

    combine_image_to_video('work/mp4_img3/', 'work/mp4_analysis.mp4', fps)
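As an alternative to assembling the frames in Python, ffmpeg can read a numbered image sequence directly. The sketch below only builds and runs the command; build_sequence_command and encode_sequence are hypothetical helpers, the %d.jpg pattern assumes frames named 0.jpg, 1.jpg, ..., and it assumes an ffmpeg build with libx264 on the PATH.

```python
import subprocess


def build_sequence_command(frame_dir, output_path, fps, start_number=0):
    """Build an ffmpeg command that encodes frame_dir/0.jpg, 1.jpg, ... as video."""
    pattern = frame_dir.rstrip("/") + "/%d.jpg"
    return [
        "ffmpeg", "-y",                      # -y: overwrite the output file
        "-framerate", str(fps),              # rate at which the images are read
        "-start_number", str(start_number),  # index of the first frame
        "-i", pattern,
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",               # pixel format most players accept
        output_path,
    ]


def encode_sequence(frame_dir, output_path, fps):
    """Run the command; raises CalledProcessError if ffmpeg reports failure."""
    subprocess.run(build_sequence_command(frame_dir, output_path, fps), check=True)


print(" ".join(build_sequence_command("work/mp4_img3/", "work/mp4_analysis.mp4", "30000/1001")))
```

Passing the frame rate as a string such as "30000/1001" lets ffmpeg use the exact rational value rather than a rounded 29.97.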

8. Add the original audio

    ! ffmpeg -i test.mp4 -vn work/video.mp3
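The command above only extracts the audio track (-vn drops the video stream). A sketch of one way to put the sound back is to take the audio stream straight from the original file and copy both streams without re-encoding. build_mux_command is a hypothetical helper and work/output/result.mp4 is a placeholder path; ffmpeg is assumed to be on the PATH.

```python
import subprocess


def build_mux_command(video_path, audio_source, output_path):
    """Combine the video stream of one file with the audio stream of another."""
    return [
        "ffmpeg", "-y",
        "-i", video_path,     # input 0: the stylized, silent video
        "-i", audio_source,   # input 1: the original file, which still has audio
        "-map", "0:v:0",      # first video stream of input 0
        "-map", "1:a:0",      # first audio stream of input 1
        "-c", "copy",         # copy both streams, no re-encoding
        "-shortest",          # stop when the shorter stream ends
        output_path,
    ]


def mux(video_path, audio_source, output_path):
    """Run the command; raises CalledProcessError if ffmpeg reports failure."""
    subprocess.run(build_mux_command(video_path, audio_source, output_path), check=True)


print(" ".join(build_mux_command("work/mp4_analysis.mp4", "test.mp4", "work/output/result.mp4")))
```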

10. Clean up temporary data

    ! rm -rf ./work/mp4_img/*

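About the author

😃 Name: 曾焯淇 😃

😃 Education: high school 😃

😃 From: Foshan, Guangdong (happy to meet up in person) 😃

I have reached Gold level on AI Studio and lit up 3 badges; let's follow each other~ https://aistudio.baidu.com/aistudio/personalcenter/thirdview/233221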

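Problems

If the code above had run successfully, this post would not be nearly as long. Unfortunately, it did not. Every time execution reached the conversion step, the system froze and then reported that the kernel had restarted automatically. Specifically, the following call never completed:

    model.style_transfer(images=[cv2.imread(input_file_path)], visualization=True, output_dir=output_file_path)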

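First, I ruled out a bug in the notebook code, because the official example looks like this:

    # Convert to images in the style of Makoto Shinkai's "Your Name" and "Weathering with You"

and even that example no longer runs:

    [2022-08-12 17:11:15,815] [ WARNING] - The _initialize method in HubModule will soon be deprecated, you can use the __init__() to handle the initialization of the object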

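So my guess is that the cause is one or more of the following:

- The Paddle framework has compatibility problems between versions.

- The AI Studio basic runtime provides too little memory.

- The AI Studio basic runtime provides too slow a CPU.

This attempt therefore failed. I did notice, though, that the model it uses is AnimeGANv2, which prompted me to look for answers on GitHub. I quickly found AnimeGANv2 there, and that leads to the next section.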

Using open-source projects on GitHub (failed)

animegan2-pytorch

Running the cell

    #@title Face Detector & FFHQ-style Alignment

raised

    ---------------------------------------------------------------------------

so I ran

    pip install dlib

but got

    Collecting dlib

and at that point I gave up.

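Fast Style Transfer in TensorFlow

This project's README reads really well:

    ### Stylizing Video

On closer inspection, however, the Requirements list TensorFlow 0.11.0, which is far too old, so I let it go. In addition, the model link under "Models for evaluation are located here" would not open.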

CCPL

From the project README:

    ### Pre-trained Models

The pre-trained models could not be downloaded.

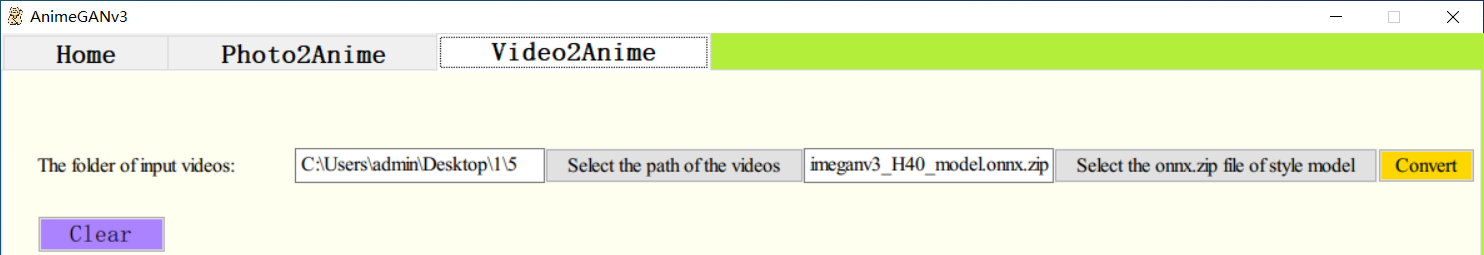

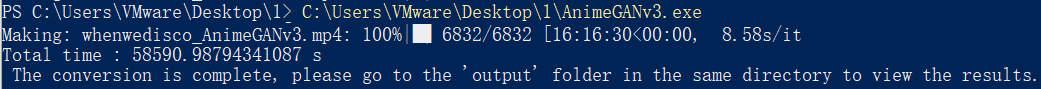

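Using AnimeGANv3

On the AnimeGANv2 project page I discovered AnimeGANv3. AnimeGANv3 is still under development; according to its project page it has not been open-sourced yet and only ships a few .exe programs for producing demos. Below, I use it to make the video.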

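Steps

1. Download the original video:

    dotnet tool install --global BBDown

    BBDown -tv https://www.bilibili.com/video/BV1SB4y1y7GQ

2. Download the AnimeGANv3 project. The project does not provide source code directly, but it does provide .exe programs, packaged with pyinstaller, that can run style transfer on images and videos out of the box:

    git clone https://github.com/TachibanaYoshino/AnimeGANv3.git

3. Start the style transfer. Launch the program and follow the prompts in its interface, choosing Vedio2Anime, then the video, then the model. Wait (quite) a while until the conversion finishes:

    ./AnimeGANv3/AnimeGANv3.exe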

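Remaining problems

The first problem is the style of the converted result. Take the cover image as an example:

- Original cover:

![cover]()

- Converted cover:

![animeganV3-cover]()

In the lower right corner of the image, 衿儿's black stockings look as if the GAN had never touched them. Worse, once Bilibili compresses the cover and mobile clients shrink it for display, the generated image is practically indistinguishable from the original. The problem is not limited to the cover; it runs through the entire video. For comparison, I also generated results with an earlier animegan model, and the defective regions showed no noticeable improvement.

Opinions in the comment section were divided on this point, but I felt it should be improved as far as possible.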

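Using DCT-Net

The morning after I posted the video to Bilibili, I came across a video introducing ModelScope from Alibaba DAMO Academy. It covered not only ModelScope but also DCT-Net. The model's showcased results looked good, and a quick trial through the web API suggested that it at least does better than AnimeGANv3, so I decided to use it to fix the shortcomings described above.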

SageMaker (failed)

I started in Amazon SageMaker Studio Lab and got straight to work:

    import os

    ---------------------------------------------------------------------------

After a quick search online, I tried

    apt-get install libglib2.0-dev

    E: Could not open lock file /var/lib/dpkg/lock-frontend - open (13: Permission denied)

Then again with sudo:

    sudo apt-get install libglib2.0-dev

    bash: sudo: command not found

Hopeless. If even opencv cannot be imported, it is time to switch to Kaggle.

Kaggle

    import os

/kaggle/input/draftmp4/example.jpg

/kaggle/input/draftmp4/real.jpg

    !mkdir -p ./mp4_img ./mp4_img3 ./output

Collecting modelscope[cv]

Downloading https://modelscope.oss-cn-beijing.aliyuncs.com/releases/v0.3/modelscope-0.3.4-py3-none-any.whl (1.0 MB)

...

(Output trimmed to save space.)

    import os

    input_video = '../input/draftmp4/whenwedisco.mp4'

    def transform_video_to_image(video_file_path, img_path):

Video frames saved successfully: 6832 images in total

    import cv2

2022-08-15 03:53:52,776 - modelscope - INFO - TensorFlow version 2.6.4 Found.

2022-08-15 03:53:52,777 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer

...

(Output trimmed to save space.)

2022-08-15 03:54:42.831239: W tensorflow/core/common_runtime/bfc_allocator.cc:272] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.98GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

No face detected!

./mp4_img/0.jpg

...

(Output trimmed to save space.)

No face detected!

finished!
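The repeated "No face detected!" lines raise a practical question: what ends up in the output folder for those frames? If nothing is written, the rebuilt video loses frames and drifts out of sync with the audio. The sketch below shows one way to guard against that by copying the original frame through whenever stylization produces nothing. It makes no claim about how ModelScope itself behaves: stylize is a caller-supplied function, and the convention that it returns False when it wrote no output is my own assumption.

```python
import os
import shutil


def stylize_frames(src_dir, dst_dir, frame_names, stylize):
    """Write exactly one output file per input frame.

    stylize(src_path, dst_path) must return True after writing dst_path, or
    False when it produced nothing (for example, no face was detected).
    Returns the names of the frames that were copied through unchanged.
    """
    os.makedirs(dst_dir, exist_ok=True)
    passthrough = []
    for name in frame_names:
        src = os.path.join(src_dir, name)
        dst = os.path.join(dst_dir, name)
        if not stylize(src, dst):
            shutil.copyfile(src, dst)  # keep frame count and timing intact
            passthrough.append(name)
    return passthrough
```

The returned list also gives a quick measure of how much of the video was left unstylized.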

    def combine_image_to_video(comb_path, output_file_path, fps, is_print=False):

finished!

    import time

built with gcc 9 (Ubuntu 9.4.0-1ubuntu1~20.04.1)

configuration: --prefix=/usr --extra-version=0ubuntu0.1 --toolchain=hardened --libdir=/usr/lib/x86_64-linux-gnu --incdir=/usr/include/x86_64-linux-gnu --arch=amd64 --enable-gpl --disable-stripping --enable-avresample --disable-filter=resample --enable-avisynth --enable-gnutls --enable-ladspa --enable-libaom --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-libcodec2 --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libgme --enable-libgsm --enable-libjack --enable-libmp3lame --enable-libmysofa --enable-libopenjpeg --enable-libopenmpt --enable-libopus --enable-libpulse --enable-librsvg --enable-librubberband --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libspeex --enable-libssh --enable-libtheora --enable-libtwolame --enable-libvidstab --enable-libvorbis --enable-libvpx --enable-libwavpack --enable-libwebp --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzmq --enable-libzvbi --enable-lv2 --enable-omx --enable-openal --enable-opencl --enable-opengl --enable-sdl2 --enable-libdc1394 --enable-libdrm --enable-libiec61883 --enable-nvenc --enable-chromaprint --enable-frei0r --enable-libx264 --enable-shared

libavutil 56. 31.100 / 56. 31.100

libavcodec 58. 54.100 / 58. 54.100

libavformat 58. 29.100 / 58. 29.100

libavdevice 58. 8.100 / 58. 8.100

libavfilter 7. 57.100 / 7. 57.100

libavresample 4. 0. 0 / 4. 0. 0

libswscale 5. 5.100 / 5. 5.100

libswresample 3. 5.100 / 3. 5.100

libpostproc 55. 5.100 / 55. 5.100

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from '../input/draftmp4/whenwedisco.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41

encoder : Lavf58.76.100

description : Bilibili VXCode Swarm Transcoder v0.7.17

Duration: 00:03:48.07, start: 0.000000, bitrate: 6469 kb/s

Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p(tv, bt709), 1920x1080, 6334 kb/s, 29.97 fps, 29.97 tbr, 30k tbn, 59.94 tbc (default)

Metadata:

handler_name : VideoHandler

Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 128 kb/s (default)

Metadata:

handler_name : SoundHandler

Stream mapping:

Stream #0:1 -> #0:0 (aac (native) -> mp3 (libmp3lame))

Press [q] to stop, [?] for help

Output #0, mp3, to './output/mp4_analysis.mp3':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41

description : Bilibili VXCode Swarm Transcoder v0.7.17

TSSE : Lavf58.29.100

Stream #0:0(und): Audio: mp3 (libmp3lame), 44100 Hz, stereo, fltp (default)

Metadata:

handler_name : SoundHandler

encoder : Lavc58.54.100 libmp3lame

size= 3564kB time=00:03:48.07 bitrate= 128.0kbits/s speed= 29x

video:0kB audio:3564kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 0.011508%

finished!

ffmpeg version 4.2.7-0ubuntu0.1 Copyright (c) 2000-2022 the FFmpeg developers


./mp4_analysis.mp4: No such file or directory

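After exporting the video, I found that its bitrate was inconsistent with the source. As the log above shows, the final ffmpeg step also failed because it could not find ./mp4_analysis.mp4. With no better option, I used Format Factory to assemble the converted frames into a video, then adjusted the speed and added the audio in Premiere Pro (Pr) before publishing; adding the audio directly in Format Factory produced errors. In the end the result finally played normally.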
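A quick calculation shows why the frame rate used when rebuilding the video matters for audio sync. The numbers come from the logs above (6832 frames, 29.97 fps, a duration of 00:03:48.07). The frame rate actually passed to the writer is not shown, so the 30 fps and 25 fps cases below are purely illustrative assumptions.

```python
from fractions import Fraction

FRAME_COUNT = 6832                  # frames reported by the extraction step
SOURCE_FPS = Fraction(30000, 1001)  # exact rate behind "29.97 fps" in the ffmpeg log
AUDIO_SECONDS = 3 * 60 + 48.07      # "Duration: 00:03:48.07"


def video_seconds(frame_count, fps):
    """Playback length of frame_count frames shown at fps frames per second."""
    return float(frame_count / Fraction(fps))


def speed_factor(frame_count, fps, target_seconds):
    """How much faster (>1) or slower (<1) playback must be to last target_seconds."""
    return video_seconds(frame_count, fps) / target_seconds


for fps in (SOURCE_FPS, 30, 25):
    seconds = video_seconds(FRAME_COUNT, fps)
    factor = speed_factor(FRAME_COUNT, fps, AUDIO_SECONDS)
    print(f"{float(fps):.2f} fps: {seconds:.2f} s of video, speed factor {factor:.3f}")
```

At the source rate, the 6832 frames last about 227.96 s, close to the 228.07 s audio track. At 30 fps they last about 227.73 s, and at 25 fps 273.28 s, in which case the video would have to be sped up by roughly 20% to match the audio.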

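Summary and reflections

The new model first runs image segmentation on each frame to locate faces, and then applies style transfer to the face regions. The problem with this approach is obvious:

In the image above, one face was transferred while the other was not.

That is all for now. If nothing else works, I may try a diffusion-based generative model later.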